We present a novel framework for rectifying occlusions and distortions in degraded texture samples from natural images.

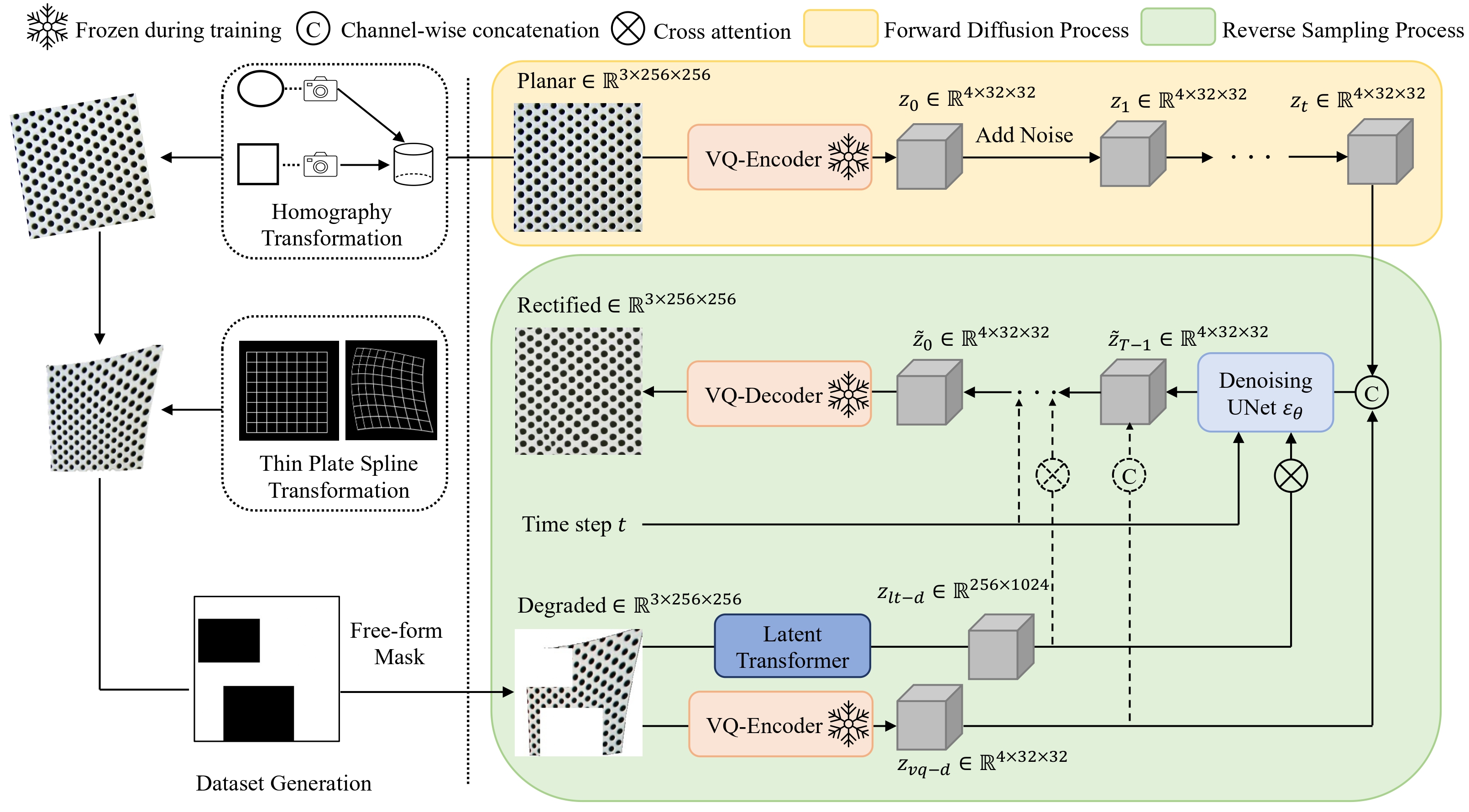

Traditional texture synthesis approaches focus on generating textures from pristine samples, which require meticulous preparation by humans and are rarely available in natural images. In natural images, texture samples are frequently occluded by obstructions and distorted by variations in object surface geometry. To address these issues, we propose a framework that synthesizes holistic textures from degraded samples in natural images, extending the applicability of exemplar-based texture synthesis techniques. Our framework utilizes a conditional Latent Diffusion Model (LDM) with a novel occlusion-aware latent transformer. This latent transformer not only effectively encodes the texture features of partially observed samples that the LDM needs for generation, but also explicitly captures long-range dependencies in samples with large occlusions. To train our model, we introduce a method for generating synthetic data by applying geometric transformations and free-form mask generation to clean textures.

Experimental results demonstrate that our framework significantly outperforms existing methods both quantitatively and qualitatively. Furthermore, we conduct comprehensive ablation studies to validate the different components of our proposed framework. Results are corroborated by a perceptual user study which highlights the efficiency of our proposed approach.

Our synthetic training dataset is constructed by applying random geometric transformations and free-form masks to planar textures. During training, our framework takes both the degraded and the planar textures as input and performs the forward diffusion and reverse sampling processes. After training, our approach takes a degraded texture sample as input and outputs a rectified texture.
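
As an illustration of the data construction described above, the following is a minimal sketch of how a degraded sample could be produced from a planar texture. It is not the authors' implementation: it assumes the geometric transformation is a random homography obtained by jittering the four image corners, that occlusions are drawn as thick random-walk strokes, and that pixels falling outside the warped texture count as unobserved. All function names and parameters (`random_homography`, `warp_nearest`, `free_form_mask`, `degrade`, `max_shift`, `brush`) are hypothetical.

```python
import numpy as np

def random_homography(rng, size, max_shift=0.15):
    """Homography sending the image corners to randomly jittered corners (assumed scheme)."""
    h, w = size
    src = np.array([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]], dtype=np.float64)
    dst = src + rng.uniform(-max_shift, max_shift, size=(4, 2)) * np.array([w, h])
    # Direct linear transform with the bottom-right entry fixed to 1.
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    params = np.linalg.solve(np.array(A), np.array(b))
    return np.append(params, 1.0).reshape(3, 3)

def warp_nearest(texture, H):
    """Warp an (H, W, C) texture by inverse mapping with nearest-neighbour sampling."""
    h, w, c = texture.shape
    ys, xs = np.mgrid[0:h, 0:w]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # homogeneous output pixels
    src = np.linalg.inv(H) @ pts                               # matching source positions
    z = np.where(np.abs(src[2]) < 1e-9, 1e-9, src[2])         # avoid division by zero
    sx = np.rint(src[0] / z).astype(int)
    sy = np.rint(src[1] / z).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    flat = np.zeros((h * w, c), dtype=texture.dtype)
    flat[valid] = texture[sy[valid], sx[valid]]
    return flat.reshape(h, w, c), valid.reshape(h, w)

def free_form_mask(rng, size, n_strokes=4, max_len=40, brush=4):
    """Boolean occlusion mask (True = occluded) drawn as thick random-walk strokes."""
    h, w = size
    mask = np.zeros((h, w), dtype=bool)
    for _ in range(n_strokes):
        x, y = int(rng.integers(0, w)), int(rng.integers(0, h))
        angle = rng.uniform(0, 2 * np.pi)
        for _ in range(int(rng.integers(10, max_len))):
            angle += rng.uniform(-0.5, 0.5)
            x = int(np.clip(x + 3 * np.cos(angle), 0, w - 1))
            y = int(np.clip(y + 3 * np.sin(angle), 0, h - 1))
            mask[max(0, y - brush):y + brush + 1, max(0, x - brush):x + brush + 1] = True
    return mask

def degrade(texture, rng):
    """Return (degraded texture, observed mask) for one clean planar texture."""
    h, w = texture.shape[:2]
    warped, valid = warp_nearest(texture, random_homography(rng, (h, w)))
    observed = valid & ~free_form_mask(rng, (h, w))
    return warped * observed[..., None], observed

rng = np.random.default_rng(0)
clean = rng.random((128, 128, 3))          # stand-in for a clean planar texture
degraded, observed = degrade(clean, rng)   # one (degraded, clean) training pair
```

Each call to `degrade` yields a new degraded view of the same clean texture, so pairs of `degraded` and `clean` can be sampled on the fly during training. The `observed` mask is returned as well because an occlusion-aware model would presumably need to know which pixels are visible.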

@Inproceedings{HaoSIGGRAPHASIA2023,
  author = {Guoqing Hao and Satoshi Iizuka and Kensho Hara and Edgar Simo-Serra and Hirokatsu Kataoka and Kazuhiro Fukui},
  title = {{Diffusion-based Holistic Texture Rectification and Synthesis}},
  booktitle = "ACM SIGGRAPH Asia 2023 Conference Papers",
  year = 2023,
}